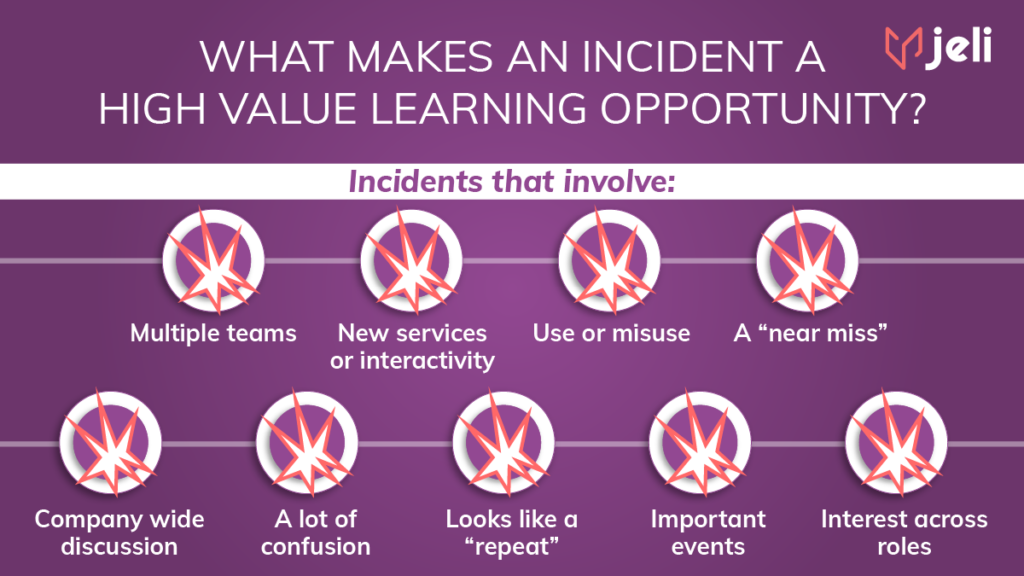

What makes an incident a high-value learning opportunity?

This post was originally published on the Jeli blog. Jeli was acquired by PagerDuty in 2023 and we’re reposting it here to bring their thought leadership to our community.

There’s an old saying that the best tool for the job is the one you have. Similarly, the best incident analysis for your organization is the one people will actually complete. There’s no one-size-fits-all solution. Sure, it’d be great if we could devote two weeks to investigating every incident, but that’s probably not realistic.

Each incident is unique. Even if an incident seems similar to, or like a recurrence of, a past problem, it happened to different people at a different time. There is almost always something new to be learned. The time, attention, and effort you spend analyzing an incident should be based on its potential to reveal insights about your organization’s practices, systems, history, or expertise. We neither want nor need to do the same level of analysis for every incident.

Therefore, it’s useful to develop the skills to recognize which incidents have greater potential to reveal valuable insights, and which may not be worth spending as much time on. For those that merit deeper analysis, we want to invest that time, attention, and effort so our organization learns as much from them as it possibly can. But how do we develop these insight-spotting skills? The characteristics below often signal an incident worth a closer look.

More than two teams were involved

Coordination across teams can make incident handling harder when teams who have never worked together have to do so under stress, uncertainty, and time pressure. Difficulties can be amplified if responders lack a shared understanding of how to work together effectively. This can mean being unsure who knows what about different parts of the problem, who has (or does not have) access to needed information, and even simple things like how to get everyone together to work the problem jointly (for example, when people are in different Slack workspaces or on separate Zoom calls). These difficulties often grow as the number of teams, people, and software systems involved increases.

Investigating these kinds of incidents can help identify where sources of deep expertise live within the company, something that may not be reflected in organizational charts or team makeup. A focus on coordination across teams is a worthwhile investment, since research has shown that tacit organizational knowledge is essential to smooth incident response.

A new service or interaction between services was involved

Unanticipated interactions between software components can cause confusion when what a responder thought should happen doesn’t happen. In other words, their mental model of the system differs from how the system actually behaves. In these cases, mental models are partial, incomplete, or erroneous, which is a very common characteristic of working within complex and changing systems.

Investigating these kinds of incidents can help all parties (the responders themselves, readers of incident reports, or attendees at postmortems) update their own mental models and share insights that could help others.

It involved use or misuse of something that seemed simple or uninteresting

Relatedly, mental models can be buggy or faulty due to oversimplification. The use of heuristics, or mental shortcuts, is common even in expert performance. In software engineering, an understanding of how a system works is frequently built up over time through accumulated experience with that system under varying operating conditions.

Oversimplifications are readily seen as such in hindsight, after the incident. An investigation should reveal why it made sense to the responders to view things as they did, and doing so often uncovers multiple other people who thought the same thing! So these incidents are an opportunity to learn when and how your engineers rely on simplifications, and to discuss the intended and expected behavior in order to increase knowledge sharing.

The event involved a use case that was never thought of (indication of a surprise)

All software contains a model of its users: the assumptions the developers made about the users’ skills, capabilities, and how they might use the software. Well-designed software attempts to account for unintended use cases by failing gracefully. Poor design causes performance to collapse when the software is used outside of its designed-for operating conditions.

Investigating these kinds of incidents can reveal important insights about how customers use the software, how incident responders reason about unanticipated uses, and how best to support incident response around failures with a high degree of uncertainty.

The event was almost really bad, aka a “near miss”

An “almost incident” is one that may have turned out very differently if not for someone ‘randomly’ noticing a wonky dashboard, or an engineer who ‘just happened’ to be looking at the logs. These seemingly “lucky” misses often reveal important insights into the normal, everyday performance of the system. They might point to key people who routinely scan for trouble unprompted, or to someone who listens in on customer support conversations to notice early signals of problems.

Investigating these ‘almost’ events can uncover these helpful behaviors and codify them to enhance resilience. These kinds of incidents often come up in casual conversation, either because of the fear they evoke (‘this could have been so bad!’) or the curiosity they generate (‘I wonder why this didn’t end up being worse?’).

It looks like a “repeat” incident

No one likes to have the same incident twice – it can demoralize engineers, frustrate managers, anger customers or attract the attention of regulators.

Deeper investigations of seemingly repeated incidents can be useful for teasing out subtle but non-trivial differences that made handling the incident particularly challenging for responders. They can also help clarify what kinds of organizational pressures (like overloaded teams) and constraints (like limitations in the code) prevented a previous corrective action from being applied.

Common examples we see throughout the industry include “cert expiries” and “config changes”. Repeats like these often reflect a deeper systemic issue that makes such errors likely to recur.

There is a lot of discussion around the incident in Slack channels or around the office

Ongoing interest in or attention to an incident can indicate curiosity, surprise, or discomfort with some aspect of the event. The technical details may be interesting or important to other engineering work. Or it could be a long-standing latent issue that continues to cause disruptions and is not being addressed due to organizational factors such as siloing, refusals to collaborate, overloaded teams, or differing opinions about the priority of the work needed to fix it.

Investigating these kinds of incidents can be excellent professional development for the engineering team to deepen their understanding of different kinds of software or a chance to repair or develop cross-functional relationships.

There was confusion expressed in or around the event

Some degree of confusion about what is happening during an event is normal for complex system failures. Extended periods of uncertainty, or difficulty forming hypotheses about the source of a problem or about actions that could yield more information, can signal knowledge gaps. However, simply naming a knowledge gap as the cause of an incident leaves important details about onboarding and training, handoffs, code reviews, and ongoing knowledge updating and sharing unexamined.

We especially like to investigate these kinds of incidents to provide micro-learning opportunities where participants share their expertise and ask clarifying questions to help improve understanding.

The incident took place during an important event (e.g., an earnings call)

Incidents that occur during high-visibility events despite testing and proactive mitigations can be excruciating due to their higher impact on the business.

Investigating these incidents can help restore customer or leadership confidence and help engineering teams cope with potentially traumatic incidents by providing transparent, thoughtful reflections on the contributing factors.

There is interest in investigating it further (e.g., a big customer or leadership concern)

People in roles outside engineering (customer support, marketing, or senior leadership) may express an interest in understanding more about an event. Engaging the organization in the practice of incident analysis is an excellent way to generate support for post-incident learning activities and to encourage different parts of the organization to contribute their perspectives, further broadening the impact of the time and effort spent investigating.

Learning Is The Goal

While the above represent some promising directions for what to investigate, many companies have their own internal criteria, and we see that engineers develop a keen sense of what will provide value to their team or company. Over time, deciding what to investigate gets easier and more efficient. It’s the same as with software development: an experienced engineer can write more valuable code in less time than an engineer just learning the system. As you continue investigating your incidents and near misses, your organization will further refine the kinds of incidents it investigates and build a more proactive and resilient engineering culture.

For more detail on these and other incident analysis topics, check out Howie: The Post Incident Guide.